BaGuaLu

Overview

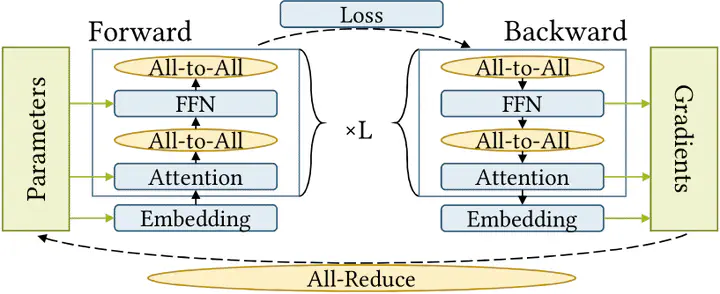

Large-scale pretrained AI models have shown state-of-the-art accuracy in a series of important applications. As the size of pretrained AI models grows dramatically each year in an effort to achieve higher accuracy, training such models requires massive computing and memory capabilities, which accelerates the convergence of AI and HPC. However, there are still gaps in deploying AI applications on HPC systems, and closing them requires co-designing applications and systems around specific hardware features.

To this end, this paper proposes BaGuaLu, the first work targeting training brain-scale models on an entire exascale supercomputer, the New Generation Sunway Supercomputer. By combining hardware-specific intra-node optimization and hybrid parallel strategies, BaGuaLu enables decent performance and scalability on unprecedentedly large models. The evaluation shows that BaGuaLu can train 14.5-trillion-parameter models with a performance of over 1 EFLOPS using mixed precision and has the capability to train 174-trillion-parameter models, which rivals the number of synapses in a human brain.
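To give a rough sense of why models at this scale demand the memory of an entire supercomputer, the following back-of-the-envelope sketch estimates the storage needed for the raw parameters alone. The byte widths (2 bytes for half precision, 4 bytes for single precision) and the helper name `param_memory_tb` are illustrative assumptions, not details taken from the paper; the estimate also ignores gradients, optimizer state, and activations, so actual training memory would be considerably larger.

```python
# Back-of-the-envelope parameter storage for the model sizes quoted above.
# Assumption: each parameter is stored once, in either 2 bytes (half
# precision) or 4 bytes (single precision). Gradients, optimizer state,
# and activations are deliberately left out.

MODEL_SIZES = {"14.5T": 14.5e12, "174T": 174e12}  # parameter counts
BYTES_PER_PARAM = {"fp16": 2, "fp32": 4}          # assumed storage widths


def param_memory_tb(num_params: float, bytes_per_param: int) -> float:
    """Return the storage for the raw parameters in decimal terabytes."""
    return num_params * bytes_per_param / 1e12


def footprint_table() -> dict:
    """Map (model size, precision) to parameter storage in terabytes."""
    return {
        (size, precision): param_memory_tb(count, width)
        for size, count in MODEL_SIZES.items()
        for precision, width in BYTES_PER_PARAM.items()
    }


if __name__ == "__main__":
    for (size, precision), tb in footprint_table().items():
        print(f"{size} parameters in {precision}: {tb:.0f} TB")
```

Under these assumptions, the 174-trillion-parameter configuration needs hundreds of terabytes for the weights alone, which is one reason the parameters have to be partitioned across a very large number of nodes.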