EinNet derivation

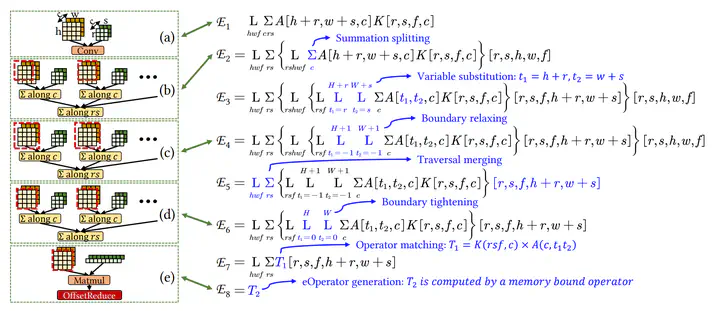

Boosting the execution performance of deep neural networks (DNNs) is critical given their wide adoption in real-world applications. However, existing approaches to optimizing DNN tensor computation only consider transformations that can be represented by a fixed set of predefined tensor operators, which leaves a highly restricted optimization space. To address this issue, we propose EinNet, a derivation-based tensor program optimizer. EinNet optimizes tensor programs by applying transformations between general tensor algebra expressions and automatically creating the new operators that those transformations require, enabling a significantly larger search space that includes the search spaces of prior work as special cases. Evaluation on seven DNNs shows that EinNet outperforms existing tensor program optimizers by up to 2.72× (1.52× on average) on NVIDIA A100 and up to 2.68× (1.55× on average) on NVIDIA V100.
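To make the idea of transforming between tensor algebra expressions concrete, the NumPy sketch below rewrites a 3×3 convolution as a single matrix multiplication followed by a shifted summation that no predefined operator covers. It is an illustrative assumption about the kind of rewrite such an optimizer could explore, not EinNet's implementation; the function names `conv3x3_direct` and `conv3x3_derived` are hypothetical.

```python
import numpy as np


def conv3x3_direct(x, k):
    """Reference 3x3 convolution with zero padding and stride 1.

    out[f, h, w] = sum_{c, r, s} x[c, h + r - 1, w + s - 1] * k[f, c, r, s]
    x has shape (C, H, W); k has shape (F, C, 3, 3).
    """
    _, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((k.shape[0], h, w))
    for r in range(3):
        for s in range(3):
            # Contract channels for one kernel tap on the shifted input window.
            out += np.einsum("fc,chw->fhw", k[:, :, r, s], xp[:, r:r + h, s:s + w])
    return out


def conv3x3_derived(x, k):
    """Same result, derived as one matmul plus an offset-and-sum step."""
    f, c = k.shape[:2]
    _, h, w = x.shape
    # Step 1: a single matmul, (9 * F, C) @ (C, H * W), giving
    # t[r, s, f, h, w] = sum_c k[f, c, r, s] * x[c, h, w].
    k_mat = k.transpose(2, 3, 0, 1).reshape(9 * f, c)
    t = (k_mat @ x.reshape(c, h * w)).reshape(3, 3, f, h, w)
    # Step 2: a custom operator that shifts each (r, s) slice and sums:
    # out[f, h, w] = sum_{r, s} t[r, s, f, h + r - 1, w + s - 1].
    tp = np.pad(t, ((0, 0), (0, 0), (0, 0), (1, 1), (1, 1)))
    out = np.zeros((f, h, w))
    for r in range(3):
        for s in range(3):
            out += tp[r, s, :, r:r + h, s:s + w]
    return out
```

Both functions compute the same tensor, so `np.allclose(conv3x3_direct(x, k), conv3x3_derived(x, k))` holds for matching shapes. The second form moves all multiplications into one large matmul, at the cost of needing an operator (step 2) that a fixed operator set would not contain.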