WiseGraph Overview

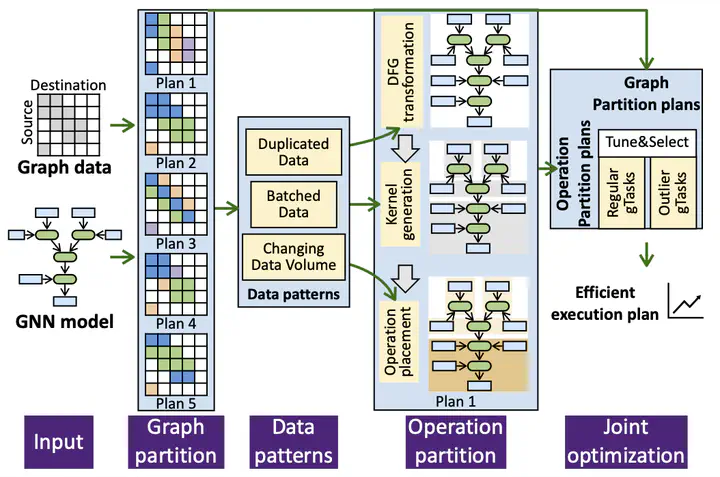

Graph Neural Networks (GNNs) have emerged as an important workload for learning on graphs. As graph datasets grow larger and GNN model architectures grow more complex, building efficient GNN systems becomes increasingly important. Because GNN training performs heavy neural computation over large graphs, it is crucial to partition the entire workload into smaller parts for parallel execution and optimization. However, existing approaches partition graph data and GNN operations separately, which causes inefficiency and large data-movement overhead. To address this problem, we present WiseGraph, a GNN training framework that explores the joint optimization space of graph data partition and GNN operation partition. To bridge the gap between these two classes of partitions, we propose gTask, a workload abstraction tailored to GNNs that can both describe existing GNN partition strategies as special cases and expose new optimization opportunities. Based on gTasks, WiseGraph generates partition plans that adapt to the input graph data and GNN model. Evaluation on five typical GNN models shows that WiseGraph outperforms existing GNN frameworks by 2.04× in single-GPU training and 2.22× in multi-GPU training. WiseGraph is publicly available at https://github.com/xxcclong/CxGNN-Compute/.
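The abstract does not define the fields of a gTask, so the following is only a rough intuition for what a "joint" partition space looks like, not WiseGraph's actual abstraction. It assumes a hypothetical task pairs a block of destination nodes (a graph data partition) with a block of feature columns (an operation partition) for a simple sum aggregation. The names `Task`, `make_plan`, `run_task`, and `aggregate_partitioned` are illustrative inventions, not part of WiseGraph or CxGNN-Compute.

```python
from dataclasses import dataclass
from itertools import product


# Hypothetical task: pairs a graph-data partition (destination-node block)
# with an operation partition (feature-column block).
# This is NOT WiseGraph's gTask definition, only an illustration.
@dataclass(frozen=True)
class Task:
    dst_nodes: range  # destination nodes this task aggregates into
    feat_cols: range  # feature columns this task computes


def aggregate_full(edges, x, num_nodes):
    """Unpartitioned reference: out[v] = sum of x[u] over edges (u, v)."""
    dim = len(x[0])
    out = [[0.0] * dim for _ in range(num_nodes)]
    for u, v in edges:
        for j in range(dim):
            out[v][j] += x[u][j]
    return out


def make_plan(num_nodes, dim, node_block, col_block):
    """Enumerate one point in the joint space: every (node block, column block) pair."""
    node_parts = [range(s, min(s + node_block, num_nodes))
                  for s in range(0, num_nodes, node_block)]
    col_parts = [range(s, min(s + col_block, dim))
                 for s in range(0, dim, col_block)]
    return [Task(n, c) for n, c in product(node_parts, col_parts)]


def run_task(task, edges, x, out):
    """Execute one task: only edges into task.dst_nodes, only columns in task.feat_cols."""
    for u, v in edges:
        if v in task.dst_nodes:
            for j in task.feat_cols:
                out[v][j] += x[u][j]


def aggregate_partitioned(edges, x, num_nodes, node_block, col_block):
    """Run every task in the plan; tasks write disjoint output tiles."""
    dim = len(x[0])
    out = [[0.0] * dim for _ in range(num_nodes)]
    for task in make_plan(num_nodes, dim, node_block, col_block):
        run_task(task, edges, x, out)
    return out


if __name__ == "__main__":
    edges = [(0, 1), (2, 1), (1, 0), (3, 2), (0, 3)]
    x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0], [10.0, 11.0, 12.0]]
    # Different block sizes give different plans that compute the same result.
    assert aggregate_partitioned(edges, x, 4, 2, 2) == aggregate_full(edges, x, 4)
    print(len(make_plan(4, 3, 2, 2)), "tasks")
```

Each choice of `node_block` and `col_block` yields a different plan with the same numerical result but different data reuse and parallelism; picking among such plans based on the input graph and model is what the abstract attributes to WiseGraph, and this sketch makes no attempt at that selection.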